There are a few things everybody knows about twins. They always come in pairs, and they are never precisely identical. When it comes to the industrial technology of digital twins, therefore, it is not surprising that there are two prominent definitions with many similarities, but a few significant differences.

Digital twins are an important component of cyber-physical industrial systems – the technology as important to the ongoing fourth industrial revolution as steam power was to the first in the 18th and 19th centuries. However, although virtually every vendor offering hardware or software that contributes to cyber-physical systems claims compatibility with digital twins, each uses its own definition. Two that stand out as having significant commonalities come from technology research and consulting company Gartner and the Digital Twin Consortium, a grouping of academia, government bodies, and industry aiming to accelerate the development, adoption, interoperability, and security of digital twin enabling technologies.

Digital twins in two definitions

According to Gartner, “all digital twins have two primary roles for improving business outcomes.” One of those roles is to improve situational awareness: “Functionally, all digital twins — at a minimum — monitor data from things to improve our situational awareness.” In practice, this means that a digital twin will represent, in some way, the operation of a process using data gathered from sensors that monitor the elements of the process – for example, machines on a production line – to tell operators what is happening in their process at any time, and which can be analyzed to provide insights into how the process could be optimized to increase output and improve environmental performance. If the digital twin is connected to actuators in the factory, this optimization can even happen automatically. The representation may be graphical – a flow diagram of the process or a 3D rendering of a factory production line – or it may be just a series of changing numbers on a table or dashboard.

The Digital Twin Consortium, on the other hand, defines a digital twin as “a virtual representation of real-world entities and processes, synchronized at a specified frequency and fidelity”, adding that digital twins use “real-time and historical data to represent the past and present and simulate predicted futures”. This definition does not insist on the live link to sensors specified by Gartner. For the Digital Twin Consortium, a twin can be updated hourly, daily, weekly, or even monthly, as long as the updating frequency is specified.
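
The idea of synchronizing "at a specified frequency" can be made concrete with a small sketch. The Python below is purely illustrative: the `SyncPolicy` class, its field names, and the example intervals are assumptions made for this article and are not part of either organization's definition.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta


@dataclass(frozen=True)
class SyncPolicy:
    """Hypothetical record of how often a twin must be refreshed."""
    name: str
    interval: timedelta

    def is_stale(self, last_sync: datetime, now: datetime) -> bool:
        # The twin is out of date once more than one interval has elapsed.
        return now - last_sync > self.interval


# Two example policies: a near-live sensor link and a weekly update.
live = SyncPolicy("live sensor link", timedelta(seconds=1))
weekly = SyncPolicy("weekly layout scan", timedelta(weeks=1))

last_sync = datetime(2024, 1, 1, 8, 0, 0)
now = datetime(2024, 1, 3, 8, 0, 0)  # two days after the last update

print(live.is_stale(last_sync, now))    # True
print(weekly.is_stale(last_sync, now))  # False
```

Under this reading, both twins are valid as long as the interval is stated; what differs is how quickly each one counts as out of date.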

What comprises a digital twin?

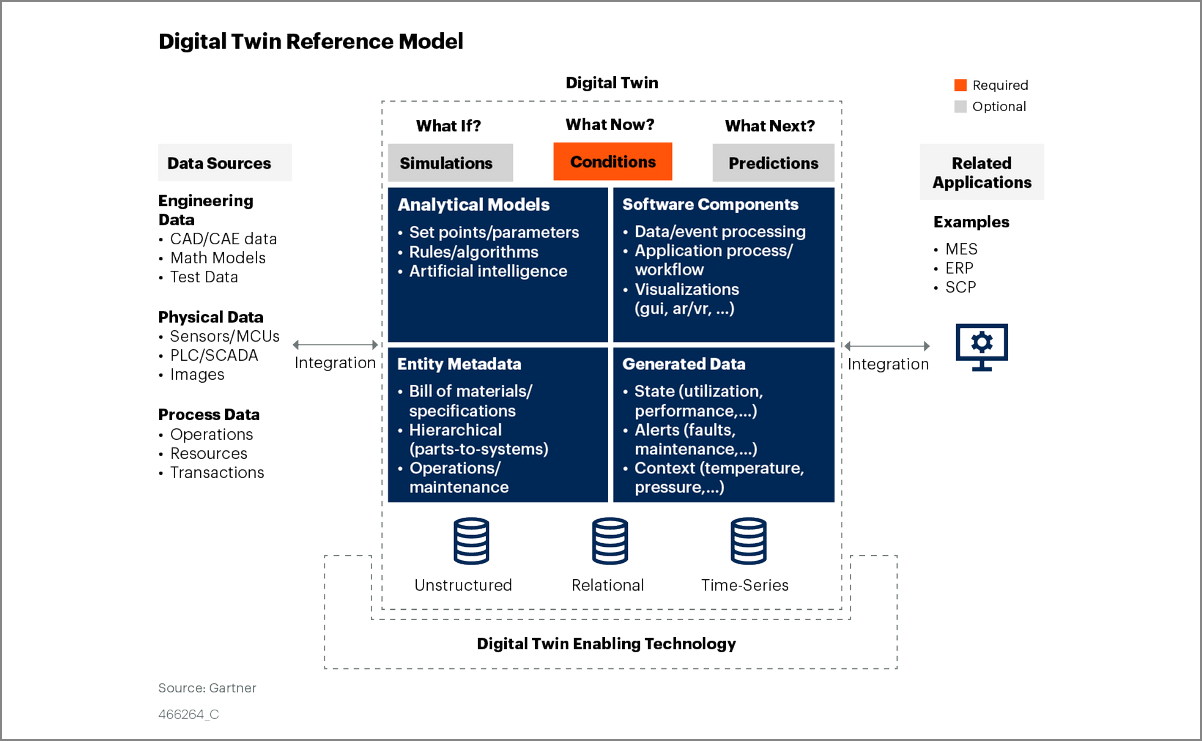

The Gartner model rests on three “pillars” and is built from four “blocks”. The pillars are “what if?” – in other words, simulations of how the system would react to certain input conditions; “what now?” – how is the system currently behaving, derived from live data from distributed sensors; and “what next?” – how will the system behave in the future, for example, when are components likely to fail?

The blocks are the data and software needed to derive the pillars. First, the system has to be defined through “entity metadata” – this is all the information about each component of the system which does not change. It might include serial numbers of machinery, physical sizes, and a detailed physical or graphical model. Selecting the right amount of metadata is crucial – too little, and the digital twin will not identify the equipment accurately; too much, and superfluous information might overwhelm and mask the vital facts. Once the system is defined, it needs to monitor and record “generated data” – all the information about the state of the system components, gathered by the distributed sensor network of the Internet of Things-enabled devices making up the plant or other system being twinned. Again, achieving the right granularity is crucial.

Once you have all this data, you need to do something with it, and this is where the other two blocks come in. The first of these blocks is “analytical models” – defined as “virtual representations of the behavior of twinned entities that improve situational awareness”. The most important thing about these models is that they must link the generated data with the operational purpose of the digital twin; for example, the energy cost of running a machine-tool at a certain speed, or the effect on output of a change in the process.

The final block is “software components” which, in Gartner’s definition, “choreograph data ingestion, analytics, event generation, and – if needed – any visualization and workflow (for automating business responses)”. One example of a software component is a system that creates and schedules maintenance tasks in response to the digital twin’s generated data or an analytical model. Another is a third-party graphical rendering package, or even a gaming engine, that produces an augmented or virtual reality visualization of the plant.
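
To show how the four blocks relate to one another, here is a minimal sketch in Python. It is not Gartner's design or any vendor's API: every class, function, and field name (`EntityMetadata`, `Reading`, `energy_cost_per_hour`, `TwinRuntime`) and every number is a hypothetical chosen for illustration, using the machine-tool energy cost example mentioned above.

```python
from dataclasses import dataclass, field
from typing import Dict, List


@dataclass
class EntityMetadata:
    """Block 1: static facts about a twinned entity (hypothetical fields)."""
    serial_number: str
    description: str


@dataclass
class Reading:
    """Block 2: one item of generated data from a sensor."""
    serial_number: str
    spindle_speed_rpm: float
    power_kw: float


def energy_cost_per_hour(reading: Reading, price_per_kwh: float) -> float:
    """Block 3: an analytical model linking a reading to an operating cost."""
    return reading.power_kw * price_per_kwh


@dataclass
class TwinRuntime:
    """Block 4: a software component that ingests data and raises events."""
    metadata: Dict[str, EntityMetadata]
    price_per_kwh: float
    cost_limit: float
    events: List[str] = field(default_factory=list)

    def ingest(self, reading: Reading) -> float:
        cost = energy_cost_per_hour(reading, self.price_per_kwh)
        if cost > self.cost_limit:
            entity = self.metadata[reading.serial_number]
            self.events.append(
                f"{entity.description}: cost {cost:.2f}/h exceeds limit"
            )
        return cost


twin = TwinRuntime(
    metadata={"MT-001": EntityMetadata("MT-001", "Machine tool 1")},
    price_per_kwh=0.25,
    cost_limit=3.0,
)
slow = twin.ingest(Reading("MT-001", spindle_speed_rpm=6000, power_kw=10.0))
fast = twin.ingest(Reading("MT-001", spindle_speed_rpm=12000, power_kw=16.0))
print(slow, fast)    # 2.5 4.0
print(twin.events)   # ['Machine tool 1: cost 4.00/h exceeds limit']
```

The metadata stays fixed while readings arrive continuously, which mirrors the distinction the article draws between data that does not change and data that does.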

Under the Digital Twin Consortium definition, however, the reference to real-time and historical data representing the past and present and simulating the future implies that it regards all three pillars as crucial, the difference lying in the way the data is gathered and the digital twin updated.

How to power a digital twin using spatial data

The input of spatial data systems such as those provided by NavVis to digital twins can be categorized as contributing to both the entity metadata and software components blocks. Dr. Lorenz Lachauer, Head of Solutions at NavVis, explains. “On the one end, NavVis delivers data, which is being generated with our indoor mapping systems, e.g., the NavVis VLX. Within the Digital Twin definition of Gartner, this data, consisting of point clouds and panoramic images, fits into the category of external data sources, more specifically ‘physical data’. Furthermore, the digital twin is composed of four essential building blocks, one of them being software components. And in there, there are visualization components, and that is where NavVis IVION fits into.”

Lachauer stressed that in all cases, NavVis systems are concerned with the factory or process plant and not with the product. They can link real-time data with spatial data and visualizations of the related machines. They also provide accurate point cloud data and panoramic imagery that the user can interact with, supplying the location, physical shape, and size information needed for a visual representation of the manufacturing operation. The generated data can then be assigned to that representation, making it easier to navigate around the system and identify the precise sites that might need attention at any given time.
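
The idea of assigning generated data to a location can be sketched in a few lines of Python. This example does not use any NavVis product or API; the machine names, coordinates, and the `nearest_alert` helper are all hypothetical, and the positions simply stand in for locations that might be read off a scan of the factory floor.

```python
from dataclasses import dataclass
from math import dist
from typing import Dict, List, Tuple

Point = Tuple[float, float, float]


@dataclass
class Alert:
    """A condition raised from generated data for one machine."""
    machine_id: str
    message: str


# Hypothetical machine positions in metres within the scanned space.
positions: Dict[str, Point] = {
    "press-1": (2.0, 5.0, 0.0),
    "lathe-2": (14.0, 3.0, 0.0),
    "oven-3": (30.0, 12.0, 0.0),
}

alerts: List[Alert] = [
    Alert("oven-3", "temperature high"),
    Alert("lathe-2", "vibration high"),
]


def nearest_alert(operator: Point, alerts: List[Alert],
                  positions: Dict[str, Point]) -> Alert:
    """Return the alert whose machine is closest to the operator."""
    return min(alerts, key=lambda a: dist(operator, positions[a.machine_id]))


operator_at: Point = (10.0, 4.0, 0.0)
closest = nearest_alert(operator_at, alerts, positions)
print(closest.machine_id, "-", closest.message)  # lathe-2 - vibration high
```

Without the positions, the two alerts are just rows in a table; with them, an operator can be directed to the nearest site that needs attention.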

“Digital twins are never done by just one manufacturer,” Lachauer comments. “Every software or hardware producer in the industry claims ‘we do a digital twin’. But we can see from the Gartner definition that this is something which is composed of at least a few components.” There are, he says, an increasing number of IoT companies that are developing and offering systems that provide sensors, live data, and connectivity, but they do not provide a good visualization of the manufacturing facility. “Therefore, if you bring in an IoT and sensor data and combine it with more accurate visual data, then you might have a comprehensive and useful digital twin.”

Phillip Quadstege, Senior Solution Manager and a member of Lachauer’s team, linked the role of NavVis back to the Digital Twin Consortium’s definition. “It states that, within the digital twin, there are several digital models that correlate with each other. It clarifies that digital models can be 3D measurements, 3D CAD models, mathematical equations, etc. We fit in this 3D measurement part. In these virtual representations, you have several models that correlate with each other, and they communicate with each other.”

Spatial data will play a key role in digitalizing manufacturing facilities

A growing share of physical devices now have a digital twin. However, Lachauer says, very few manufacturing facilities or assets are fully represented in this way. Spatial data is a key component of a digital twin in manufacturing because it gives generated data a visual context drawn from the real factory, especially where several sub-processes are distributed around an indoor space. NavVis equipment and systems provide a straightforward way for operators to gather that data on a regular basis, keep track of changes in the physical layout of the factory, and update the digital twin as necessary.

As we saw earlier in this article, the definitions of digital twins provided by two of the most influential bodies in the industry agree in most respects. Both require data that identify the various components of a manufacturing process and do not change while the process is in operation, and both require the collection of changing data about the operation of those components. These changing data are analyzed to provide insights into how the process can be optimized in whatever fashion is most important to its operators, and both physical and software components contribute to the composition and operation of the digital twin.

One major difference between the two definitions lies in whether the operating data is used to update the twin instantaneously or whether updates are scheduled at regular intervals. NavVis provides systems for gathering and visualizing spatial data that help define the physical layout of the space housing the manufacturing process. The same systems can be used to update the physical model underpinning a digital twin if that layout changes. In this way, NavVis technology becomes one of the software components contributing to the digital twin.

Wrapping up

In subsequent articles, readers can learn how digital twins can improve the performance of manufacturing facilities and operations, and how spatial data – such as that provided by NavVis – can be a crucial component of this.

Would you like to learn more about the NavVis Digital Factory Solution? Contact us for more information, or download a copy of our free guide to getting started with your digital factory implementation.

Sources

- Gartner Research: Use 4 Building Blocks for Successful Digital Twin Design (Authors: Benoit Lheureux, Yefim Natis, Alfonso Velosa, Marc Halpern. Refreshed 7 October 2021, Published 9 June 2020), https://www.gartner.com/en/documents/3986128/use-4-building-blocks-for-successful-digital-twin-design

- Digital Twin Consortium Defines Digital Twin (Authors: Sean Olcott, Casey Mullen. Published 3 December 2020), https://blog.digitaltwinconsortium.org/2020/12/digital-twin-consortium-defines-digital-twin.html